The punch is deployed in production for small, medium and large-scale applications, in the cloud or on premises, for use cases such as cybersecurity and application or system monitoring. In this short blog we highlight a few concrete sizing examples to give you a flavor of the hardware and server requirements. Of course, these numbers are only illustrative.

Note in particular that when we speak of eps (events per second), we assume 1 KB events such as logs, JSON or equivalent textual data.
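To turn an eps figure into a data volume, multiply the event rate by the event size and by the number of seconds in the retention window. The sketch below does this arithmetic, assuming 1 KB = 1,000 bytes and a constant event rate. It deliberately ignores compression, indexing overhead and replication, all of which change the real on-disk footprint; `raw_volume_gb` is a hypothetical helper, not part of the punch.

```python
SECONDS_PER_DAY = 86_400


def raw_volume_gb(eps: float, event_bytes: int = 1_000, days: float = 1.0) -> float:
    """Raw ingested volume in GB (1 GB = 10**9 bytes) for a constant event rate.

    Assumes every event has the same size; ignores compression,
    indexing overhead and replication.
    """
    total_bytes = eps * event_bytes * SECONDS_PER_DAY * days
    return total_bytes / 1e9


if __name__ == "__main__":
    # Standalone example: 700 eps kept online for 1 day.
    print(f"{raw_volume_gb(700, days=1):.2f} GB")   # 60.48 GB
    # Cloud example: 1000 eps kept online for 1 week.
    print(f"{raw_volume_gb(1000, days=7):.2f} GB")  # 604.80 GB
```

The result is a raw reference figure only: the space Elasticsearch actually uses depends on your mappings, compression and number of replicas.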

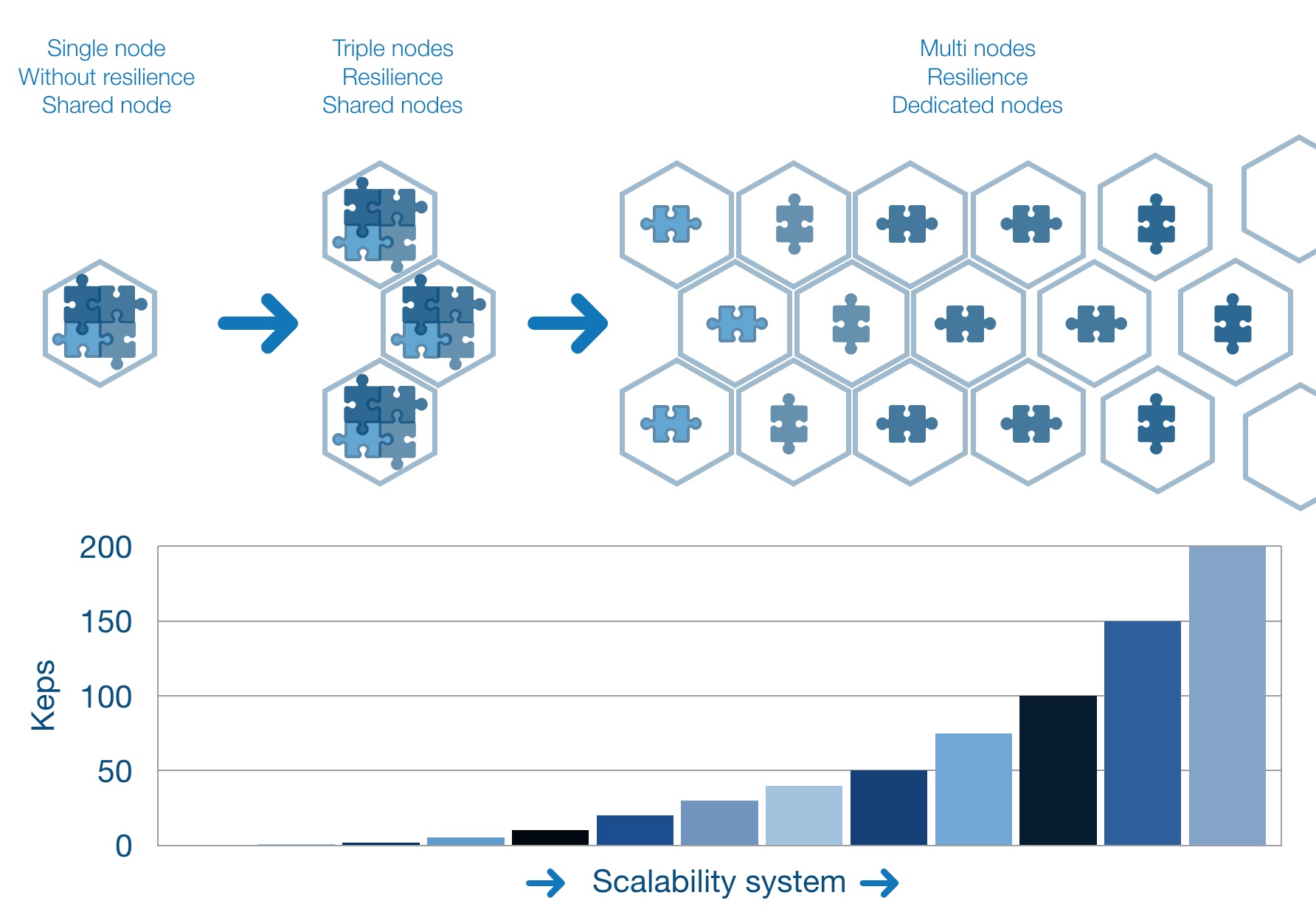

Standalone Punch Sizing

To deal with up to 700 eps, with 1 day of Elasticsearch online retention, without resilience or high availability:

- 1 virtual shared node with Kafka/Storm/Elasticsearch

- Resources per node: 4 vCPU – 8 GB RAM – 50 GB SSD

This single-server setup is available for download on our site. It lets you test the Punchplatform and get acquainted with the tool.

Cloud Punch Sizing

To deal with up to 1000 eps, with 1 week of Elasticsearch online retention.

- 3 virtual shared nodes with Kafka/Storm/Elasticsearch

- Resources per node: 4 vCPU – 16 GB RAM – 400 GB SSD

This three-server setup provides a high level of resiliency and can absorb high traffic peaks (Kafka buffers and smooths the traffic). It is especially well suited to cloud deployments. Deploying such a punch takes a few minutes.
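Kafka smooths peaks because events arriving faster than the downstream processing can handle them simply accumulate in Kafka and are consumed later. The sketch below estimates the backlog built up during a peak and the time needed to drain it afterwards. The processing capacity, baseline rate and peak profile are hypothetical inputs chosen for illustration, not measured punch figures, and `kafka_backlog` is an illustrative helper.

```python
from dataclasses import dataclass


@dataclass
class Peak:
    """A hypothetical traffic peak: input rate (eps) held for a duration (s)."""
    input_eps: float
    duration_s: float


def kafka_backlog(peak: Peak, processing_eps: float, baseline_eps: float,
                  event_bytes: int = 1_000) -> tuple[float, float, float]:
    """Return (backlog_events, backlog_mb, drain_time_s).

    Assumes consumers process at a constant `processing_eps` and that,
    after the peak, input falls back to `baseline_eps`.
    """
    if baseline_eps >= processing_eps:
        raise ValueError("backlog never drains: baseline >= processing capacity")
    # Events that arrive faster than they are processed pile up in Kafka.
    excess_eps = max(0.0, peak.input_eps - processing_eps)
    backlog_events = excess_eps * peak.duration_s
    backlog_mb = backlog_events * event_bytes / 1e6
    # After the peak, the spare processing capacity drains the backlog.
    drain_time_s = backlog_events / (processing_eps - baseline_eps)
    return backlog_events, backlog_mb, drain_time_s


if __name__ == "__main__":
    # Hypothetical: a 10-minute peak at 3000 eps, 1500 eps of processing
    # capacity, and a 1000 eps baseline once the peak is over.
    events, mb, drain = kafka_backlog(Peak(3000, 600), processing_eps=1500,
                                      baseline_eps=1000)
    print(events, mb, drain)  # 900000 900.0 1800.0
```

In this example Kafka holds about 900 MB of pending events and needs 30 minutes to catch up, which is why the disk allocated to Kafka matters as much as CPU when you expect bursts.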

This model can grow should your traffic increase. A three-server setup is a good starting point, and you benefit from all the punch features.

Contained Punch Sizing

To deal with up to 5000 eps, hold 3 months of Elasticsearch online data and up to one year of archived data:

- 8 physical nodes (3 Middle + 3 Back + 2 Admin), with:

  - 3 dedicated Kafka nodes

    - Resources: 2 vCPU – 4 GB RAM – 3 TB SSD

  - 3 dedicated Storm nodes

    - Resources: 2 vCPU – 4 GB RAM

  - 12 dedicated Elasticsearch nodes

    - Resources: 2 vCPU – 32 GB RAM – 5 TB SSD

  - 6 dedicated Ceph nodes

    - Resources: 4 vCPU – 12 GB RAM – 8 TB

This setup is especially well suited to your contained solutions.

Should your needs grow beyond this, the physical model scales by adding resources.
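A quick way to read the contained sizing is to compare, per storage tier, the volume that must be retained with the capacity that is provisioned. The sketch below does this for the Elasticsearch and Ceph tiers using the node counts and disk sizes listed above. Reading "3 months" as 90 days and "one year" as 365 days is an assumption, and so are the `stored_fraction` (on-disk size divided by raw size) and `copies` (replicas) parameters: the post does not state compression ratios or replication factors, so both default to 1.

```python
SECONDS_PER_DAY = 86_400

# Figures from the contained sizing; 90 days for "3 months" and
# 365 days for "one year" are assumptions.
TIERS = {
    "elasticsearch": {"nodes": 12, "tb_per_node": 5.0, "retention_days": 90},
    "ceph": {"nodes": 6, "tb_per_node": 8.0, "retention_days": 365},
}


def raw_tb(eps: float, days: float, event_bytes: int = 1_000) -> float:
    """Raw volume in TB (1 TB = 10**12 bytes) ingested at a constant rate."""
    return eps * event_bytes * SECONDS_PER_DAY * days / 1e12


def storage_check(eps: float, tiers: dict, stored_fraction: float = 1.0,
                  copies: int = 1) -> dict:
    """Return {tier: (needed_tb, provisioned_tb)} for each storage tier.

    stored_fraction: hypothetical on-disk size / raw size (compression,
                     index overhead); 1.0 means "stored as raw".
    copies: hypothetical number of stored copies (replicas).
    """
    report = {}
    for name, tier in tiers.items():
        needed = raw_tb(eps, tier["retention_days"]) * stored_fraction * copies
        provisioned = tier["nodes"] * tier["tb_per_node"]
        report[name] = (round(needed, 2), provisioned)
    return report


if __name__ == "__main__":
    for name, (needed, provisioned) in storage_check(5000, TIERS).items():
        print(f"{name}: {needed} TB needed, {provisioned} TB provisioned")
```

Set `stored_fraction` and `copies` from what you observe on your own data before drawing conclusions; the raw figures alone do not account for compression or replication.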

Performance Punch Sizing

To deal with more than 5000 eps, keep up to 1 year of online Elasticsearch data and 1 year of Ceph archives, with secure partitioning.

Multiple physical nodes (2 Front + x Middle + y Back + 2 Admin), with:

- 2 dedicated LMR nodes

  - Resources: 2 vCPU – 4 GB RAM

- x dedicated Kafka nodes

  - Resources: 4 vCPU – 8 GB RAM – 4 TB SSD

- 2x dedicated Storm nodes

  - Resources: 8 vCPU – 32 GB RAM

- 4y dedicated Elasticsearch nodes

  - Resources: 8 vCPU – 64 GB RAM – 6.5 TB SSD

- 2y dedicated Ceph nodes

  - Resources: 6 vCPU – 16 GB RAM – 14 TB

This setup is especially well suited to your big data solutions.
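Because the performance model is parameterized by x and y, it is convenient to compute the resulting node counts and aggregate resources for a candidate pair of values. The sketch below encodes the per-role counts and per-node resources listed above and sums them. The Admin and Front physical nodes are not counted because the post gives no resources for them, and `performance_sizing`, `totals` and `NodeSpec` are illustrative names, not punch tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    vcpu: int
    ram_gb: int
    disk_tb: float  # 0.0 when the post lists no disk for the role


def performance_sizing(x: int, y: int) -> dict[str, tuple[int, NodeSpec]]:
    """Node count and per-node resources for each role of the performance model."""
    return {
        "LMR": (2, NodeSpec(2, 4, 0.0)),
        "Kafka": (x, NodeSpec(4, 8, 4.0)),
        "Storm": (2 * x, NodeSpec(8, 32, 0.0)),
        "Elasticsearch": (4 * y, NodeSpec(8, 64, 6.5)),
        "Ceph": (2 * y, NodeSpec(6, 16, 14.0)),
    }


def totals(sizing: dict[str, tuple[int, NodeSpec]]) -> dict[str, float]:
    """Sum node counts, vCPUs, RAM and disk over all roles."""
    return {
        "nodes": sum(count for count, _ in sizing.values()),
        "vcpu": sum(count * spec.vcpu for count, spec in sizing.values()),
        "ram_gb": sum(count * spec.ram_gb for count, spec in sizing.values()),
        "disk_tb": sum(count * spec.disk_tb for count, spec in sizing.values()),
    }


if __name__ == "__main__":
    print(totals(performance_sizing(x=3, y=3)))
    # {'nodes': 29, 'vcpu': 196, 'ram_gb': 1088, 'disk_tb': 174.0}
```

Keeping the role table as data rather than hard-coded totals makes it easy to try several (x, y) pairs when traffic or retention targets change.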

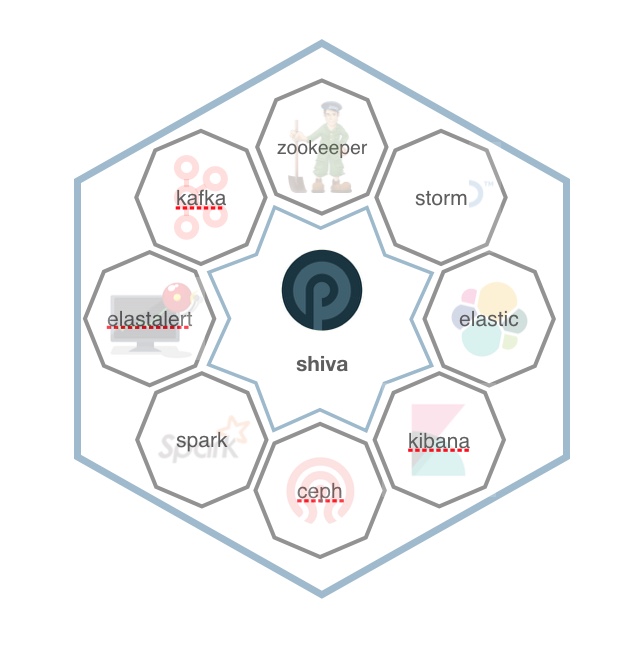

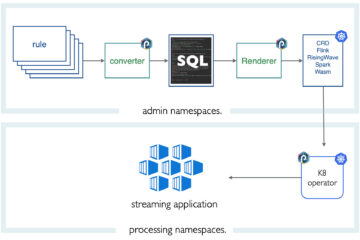

Understanding the Punchplatform

A punchplatform node is a set of core services:

- Transport,

- Elasticsearch,

- Kibana,

- Punch,

- AI/ML.

It also provides a set of additional services:

- Alerting,

- Collectors,

- Parsers,

- Archiving,

- IOC,

- Security,

- Machine learning,

- Spark,

- CEP/Siddhi,

- Forensics.

On small systems, punch nodes pool these services. On large systems, nodes become dedicated to specific services.

Your Punch Sizing

Do not hesitate to contact us for a precise sizing. It depends on your traffic and retention requirements of course, but also on the level of resiliency you need and, most importantly, on how your applications use the data. Some customers use the punch only to transport their data from one end to another; others (most of them) perform powerful queries and searches, which in turn require more resources dedicated to the Elasticsearch clusters.

The punch team provides professional services to size your initial solution and to make it grow along with the (likely) increase in traffic you will face.
