The PunchPlatform Machine Learning (PML) sdk lets you design and run arbitrary spark applications on your platform data.

Why would you do that ? Punchplatforms process large quantities of data, parsed, normalized then indexed in ElasticSeach in real time. As a result you have powerful search capabilities through Kibana dashboards. In turn, spark applications let you extract more business values from your data. You can compute statistical indicators, run learn-then-predict or learn-then-detect applications, the scope of application is extremely wide, it all depends on your data.

Doing that on your own requires some work. First you have to code and build your Spark pipeline application, taking care of lots of configuration issues such as selecting the input and output data sources. Once ready you have to deploy and run it in cycles of train then-detect/predict rounds, on enough real data so that you can evaluate if it outputs interesting findings. In many case you operate on production system where the real data resides, making it risky should you not master the resources needed by your application.

In short : it is not that easy.

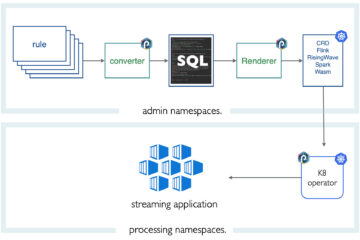

The goal of PML is to render all that much simpler and safer. In a nutshell, PML lets you configure arbitrary spark pipelines. Instead of writing code, you define a few configuration file to select your input and output data, and to fully describe your spark pipeline. You also specify the complete cycle of execution. For example : train on every last day of data, and detect on todays live data.

That is it. You submit that to the platform and it will be scheduled accordingly.

Overview

Benefits

Comparing to developing your own Spark applications, one for each of your machine-learning use-case, working with PMLconfiguration files has key benefits:

- the overall development-deployment-testing process is dramatically speed up.

- once ready you go production at no additional costs.

- all MLlib algorithms are available to your pipelines, plus the ones provided by thales or third party contributors.

- the new ML features from future spark versions will be available to you as soon as available.

- everything is in configuration, hence safely stored in the PunchPlatform git based configuration manager. No way to mess around or loose your working configurations.

- you use the robust, state-of-the art and extensively used Spark MLlib architecture.

In the following we will go through the PML configuration files. Make sure you understand enough of the Spark concepts first.

2 Comments

Punchplatform Machine Learning (PML) for platform monitoring - Punchplatform · August 24, 2018 at 09:48

[…] to machine learning based on Apache Spark: Punchplatform Machine Learning (check out this PML blog). Using Spark standard machine learning library (mllib) and our sets of metrics, we believe it is […]

News From Logstash - Punchplatform · September 23, 2018 at 08:49

[…] Say you need machine learning (batch or stream) processing? Go Spark ! And even better, do not code anything: use PML. […]